MOBDrone: a Drone Video Dataset for Man OverBoard Rescue

D. Cafarelli, L. Ciampi, L. Vadicamo, C. Gennaro, A. Berton, M. Paterni, C. Benvenuti, M. Passera, F. Falchi

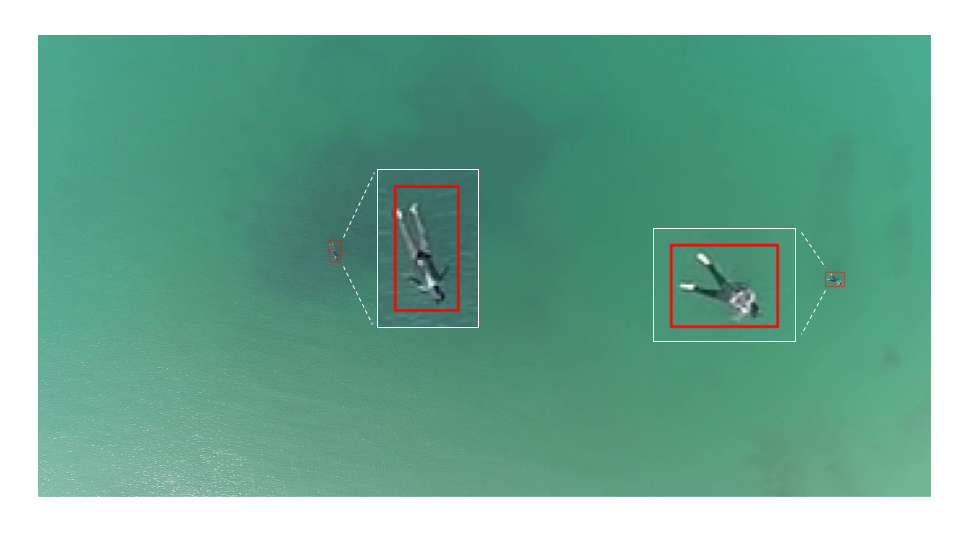

Modern Unmanned Aerial Vehicles (UAVs) equipped with cameras can play an essential role in speeding up the identification and rescue of people who have fallen overboard, i.e., man overboard (MOB). To this end, Artificial Intelligence techniques can be leveraged for the automatic understanding of visual data acquired from drones. However, detecting people at sea in aerial imagery is challenging, primarily due to the lack of specialized annotated datasets for training and testing detectors for this task. To fill this gap, we introduce and publicly release the MOBDrone benchmark, a collection of more than 125K drone-view images in a marine environment under several conditions, such as different altitudes, camera shooting angles, and illumination. We manually annotated more than 180K objects, about 113K of which are people overboard, precisely localizing them with bounding boxes. Moreover, we conduct a thorough performance analysis of several state-of-the-art object detectors on the MOBDrone data, serving as baselines for further research.

Papers

- MOBDrone: a Drone Video Dataset for Man OverBoard Rescue (Pre-Print, 4.80MB). The paper will be presented at ICIAP 2021.

Dataset

The Man OverBoard Drone (MOBDrone) dataset is a large-scale collection of aerial footage images. It contains 126,170 frames extracted from 66 video clips gathered from one UAV flying at an altitude of 10 to 60 meters above mean sea level. Images are manually annotated with more than 180K bounding boxes localizing objects belonging to 5 categories: person, boat, lifebuoy, surfboard, and wood. More than 113K of these bounding boxes belong to the person category and localize people in the water simulating the need to be rescued.

You can download different packages of the dataset using the following links:

- videos (5.5 GB) 66 Full HD video clips

- images.zip (243 GB) 126,170 images extracted from the videos at a rate of 30 FPS

- 3 annotation files for the extracted images that follow the MS COCO data format (for more info see https://cocodataset.org/#format-data):

  - annotations_5_custom_classes.json (60.5MB): this file contains annotations for all five categories. Please note that class ids do not correspond with the ones provided by the MS COCO standard, since we account for two new classes not previously considered in the MS COCO dataset: lifebuoy and wood.

  - annotations_3_coco_classes.json (54.6MB): this file contains annotations for the three classes that are also present in the MS COCO dataset: person, boat, and surfboard. Class ids correspond with the ones provided by the MS COCO standard.

  - annotations_person_coco_classes.json (45.3MB): this file contains annotations for the ‘person’ class only. The class id corresponds to the one provided by the MS COCO standard.

- http://cloudone.isti.cnr.it/rescue_drone_dataset/predictions.zip (330MB): predictions obtained with several pre-trained neural networks (including VFNet, TOOD, and DETR).
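Since the annotation files follow the MS COCO data format, they can be inspected with nothing more than a JSON parser. The snippet below is a minimal sketch, not part of the official MOBDrone code: it assumes the standard COCO top-level keys (`images`, `annotations`, `categories`), and the helper names `load_coco` and `count_boxes_per_category` are hypothetical. The toy dictionary uses made-up ids and only stands in for a real annotation file.

```python
import json
from collections import Counter
from pathlib import Path


def load_coco(path):
    """Read a COCO-format annotation file into a plain dict."""
    with Path(path).open("r", encoding="utf-8") as f:
        return json.load(f)


def count_boxes_per_category(coco):
    """Return {category name: number of bounding boxes}."""
    id_to_name = {c["id"]: c["name"] for c in coco["categories"]}
    counts = Counter(id_to_name[a["category_id"]] for a in coco["annotations"])
    # Categories without any box still get an explicit zero.
    return {name: counts.get(name, 0) for name in id_to_name.values()}


# Hand-made stand-in for a real file; ids and boxes are illustrative only
# and are NOT the class ids used in the MOBDrone annotation files.
toy = {
    "images": [{"id": 1, "file_name": "a.jpg", "width": 1920, "height": 1080}],
    "annotations": [
        {"id": 1, "image_id": 1, "category_id": 1, "bbox": [10, 20, 30, 40]},
        {"id": 2, "image_id": 1, "category_id": 1, "bbox": [50, 60, 30, 40]},
        {"id": 3, "image_id": 1, "category_id": 2, "bbox": [5, 5, 100, 80]},
    ],
    "categories": [
        {"id": 1, "name": "person"},
        {"id": 2, "name": "boat"},
        {"id": 3, "name": "lifebuoy"},
    ],
}
toy_counts = count_boxes_per_category(toy)
print(toy_counts)
```

With a downloaded file, the same function could be called as `count_boxes_per_category(load_coco("annotations_5_custom_classes.json"))`, assuming the file sits in the working directory.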

Our dataset is intended as a test data benchmark; therefore, it is not split into training and test parts. However, for researchers interested in also using our data for training purposes, we strongly suggest adopting the following data split:

- Test set: All the images whose filename starts with “DJI_0804” (total: 37,604 images)

- Training set: All the images whose filename starts with “DJI_0915” (total: 88,568 images)
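The suggested split can be applied directly to a COCO-format annotation dictionary by filtering on the filename prefix. The following is a rough sketch under stated assumptions: each entry in `images` is assumed to carry its filename in the standard COCO `file_name` field (possibly with a directory component), and the function name `split_coco_by_prefix` is hypothetical. The toy filenames only imitate the two prefixes; the part after the prefix is invented.

```python
from pathlib import PurePosixPath


def split_coco_by_prefix(coco, train_prefix="DJI_0915", test_prefix="DJI_0804"):
    """Split a COCO-format dict into (train, test) by image filename prefix."""

    def subset(prefix):
        # Compare against the basename so a leading directory does not matter.
        images = [
            img for img in coco["images"]
            if PurePosixPath(img["file_name"]).name.startswith(prefix)
        ]
        keep = {img["id"] for img in images}
        annotations = [a for a in coco["annotations"] if a["image_id"] in keep]
        return {
            "images": images,
            "annotations": annotations,
            "categories": coco["categories"],
        }

    return subset(train_prefix), subset(test_prefix)


# Toy example; filenames beyond the prefix are made up for illustration.
toy = {
    "images": [
        {"id": 1, "file_name": "DJI_0915_0001.jpg"},
        {"id": 2, "file_name": "DJI_0915_0002.jpg"},
        {"id": 3, "file_name": "frames/DJI_0804_0001.jpg"},
    ],
    "annotations": [
        {"id": 1, "image_id": 1, "category_id": 1, "bbox": [0, 0, 10, 10]},
        {"id": 2, "image_id": 1, "category_id": 1, "bbox": [5, 5, 10, 10]},
        {"id": 3, "image_id": 3, "category_id": 1, "bbox": [1, 1, 10, 10]},
    ],
    "categories": [{"id": 1, "name": "person"}],
}
train, test = split_coco_by_prefix(toy)
print(len(train["images"]), len(test["images"]))
```

Filtering annotations by the retained image ids keeps each subset self-consistent, so either dictionary can be written back out with `json.dump` and used as a standalone annotation file.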

More details about data generation and the evaluation protocol can be found in our MOBDrone paper: https://arxiv.org/abs/2203.07973

The code to reproduce our results is available at this GitHub Repository.

Cite our Work

The MOBDrone dataset is released under a Creative Commons Attribution license, so please cite it if you use it in your work in any form. Published academic papers should cite our MOBDrone paper, in which we evaluated several pre-trained state-of-the-art object detectors, focusing on the detection of people overboard:

@inproceedings{MOBDrone2021,
  title = {MOBDrone: a Drone Video Dataset for Man OverBoard Rescue},
  author = {Donato Cafarelli and Luca Ciampi and Lucia Vadicamo and Claudio Gennaro and Andrea Berton and Marco Paterni and Chiara Benvenuti and Mirko Passera and Fabrizio Falchi},
  booktitle = {Image Analysis and Processing -- ICIAP 2022},
  year = {2022},
  publisher = {Springer International Publishing},
  address = {Cham},
  pages = {633--644},
}

and the Zenodo dataset:

@dataset{donato_cafarelli_2022_5996890,
  author = {Donato Cafarelli and Luca Ciampi and Lucia Vadicamo and Claudio Gennaro and Andrea Berton and Marco Paterni and Chiara Benvenuti and Mirko Passera and Fabrizio Falchi},
  title = {{MOBDrone: a large-scale drone-view dataset for man overboard detection}},
  month = feb,
  year = 2022,
  publisher = {Zenodo},
  version = {1.0.0},
  doi = {10.5281/zenodo.5996890},
  url = {https://doi.org/10.5281/zenodo.5996890}
}

Personal works, such as machine learning projects/blog posts, should provide a URL to the MOBDrone Zenodo page (https://doi.org/10.5281/zenodo.5996890), though a reference to our MOBDrone paper would also be appreciated.

Contact Information

If you would like further information about MOBDrone or if you experience any issues downloading files, please contact us at mobdrone[at]isti.cnr.it.

Acknowledgements

This work was partially supported by NAUSICAA – “NAUtical Safety by means of Integrated Computer-Assistance Appliances 4.0” project funded by the Tuscany region (CUP D44E20003410009). The data collection was carried out with the collaboration of the Fly&Sense Service of the CNR of Pisa – for the flight operations of remotely piloted aerial systems – and of the Institute of Clinical Physiology (IFC) of the CNR – for the water immersion operations.